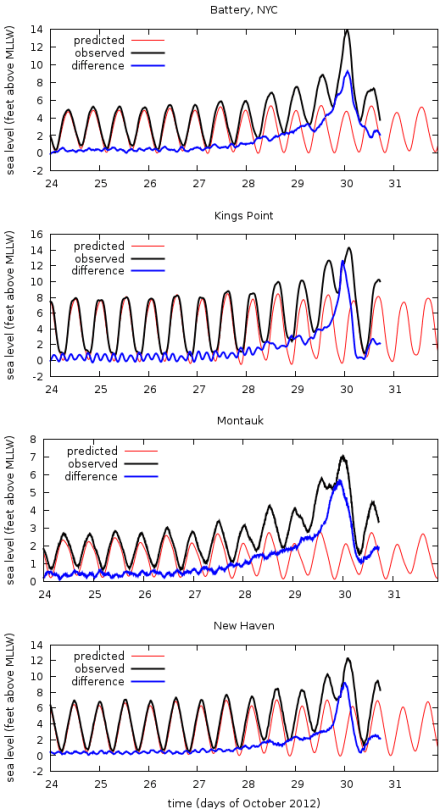

Thanks to the excellent data/interface provided by NOAA (click here for Montauk data), I was able to throw these plots together.

I have family on Long Island. As I understand it, if Hurricane Sandy’s storm surge is more than ~6 feet above high tide, the house will flood. So, I’m interested in the current ocean level. It’s still early in the storm, but the plots don’t look good.
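The comparison I'm making by eye on the plots can be written as a small sketch. It assumes the observed water level and the high-tide height are both in feet relative to the same datum (MLLW here), and it treats the ~6 ft figure as a rough threshold, not a surveyed one. The function names and the sample numbers are hypothetical placeholders, not NOAA measurements.

```python
# Rough flood check: is the observed water level more than ~6 ft above high tide?
# Assumption: all heights are in feet relative to the same datum (e.g. MLLW).

FLOOD_MARGIN_FT = 6.0  # approximate threshold from the post, not a surveyed value


def headroom_ft(observed_ft, high_tide_ft, margin_ft=FLOOD_MARGIN_FT):
    """Feet remaining before the water reaches high tide + margin.

    A negative result means the threshold has been exceeded.
    """
    return (high_tide_ft + margin_ft) - observed_ft


def readings_over_threshold(readings_ft, high_tide_ft, margin_ft=FLOOD_MARGIN_FT):
    """Return only the readings that exceed high tide + margin."""
    return [r for r in readings_ft if headroom_ft(r, high_tide_ft, margin_ft) < 0]


if __name__ == "__main__":
    # Placeholder values for illustration only; substitute real station data.
    sample_readings = [1.0, 8.5, 9.0]
    sample_high_tide = 2.0
    print(headroom_ft(5.0, sample_high_tide))
    print(readings_over_threshold(sample_readings, sample_high_tide))
```

To use it with real data, replace the placeholder list with the water levels downloaded from the NOAA station page and the high-tide value with the predicted high tide for the same station.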

For realtime updates, click the NOAA link above.

Plots updated 10:45 AM PDT 10/30/2012, final update. Status of the house is unknown, but everyone’s fine. Note the small after-surge that happened today. Neat.

Measured sea level at The Battery, Montauk, Kings Point, and New Haven during Hurricane Sandy. Data are NOAA measurements. ‘MLLW’ is “Mean Lower Low Water,” the average height of the lower of each day’s two low tides.

Good luck to everyone on the East Coast!